Let’s cut to the chase. We are building engines we don’t fully understand.

We have reached a point where AI can write code, diagnose rare diseases, and drive cars on highways. But if you ask a deep learning model why it denied a loan application or why it swerved left instead of right, it usually can’t tell you.

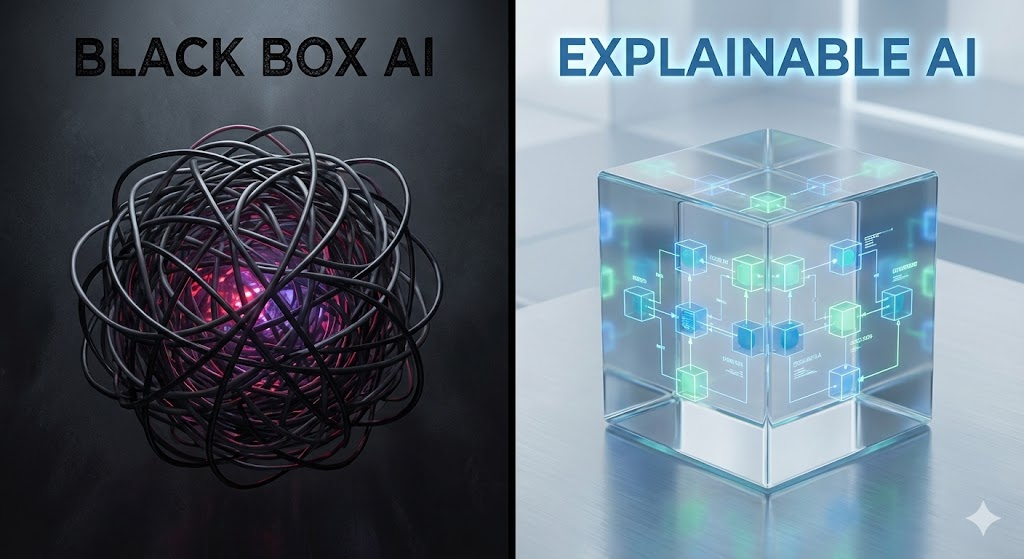

This is the “Black Box” problem. And until we solve it, AI will remain a novelty rather than a reliable foundation for society.

Trust isn’t a feeling. In engineering, trust is something you can measure, and it rests on three properties: transparency, robustness, and accountability. Here is the research-backed technical blueprint for how we actually build it.

1. The Data Supply Chain: You Are What You Eat

Most AI failures aren’t coding errors; they are data errors.

If you train a model on ten years of hiring data from a male-dominated industry, the AI will learn that being male predicts being hired and treat it as a qualification for the job. It’s not being sexist; it’s faithfully reproducing the statistical patterns in flawed inputs.
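A quick way to spot this problem before any model is trained is to compare historical outcome rates across groups. The sketch below is illustrative only: the records are hypothetical toy data, and the 0.8 cut-off is the “four-fifths” rule of thumb from US hiring guidelines, not a universal fairness standard. A model fitted to this history would inherit the same gap.

```python
from collections import defaultdict


def selection_rates(records):
    """Fraction of positive outcomes (e.g. hired) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, hired in records:
        totals[group] += 1
        positives[group] += int(hired)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical toy history of (group, hired) pairs, not real data.
history = ([("male", True)] * 40 + [("male", False)] * 60
           + [("female", True)] * 10 + [("female", False)] * 40)

rates = selection_rates(history)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'male': 0.4, 'female': 0.2}
print(ratio)  # 0.5
if ratio < 0.8:  # four-fifths rule of thumb
    print("Selection rates differ enough to warrant a review")
```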

To build trust, we have to treat data like a supply chain.

- Data Lineage: Just as you want to know if your vegetables are organic, developers need to know the provenance of their data. We need “Datasheets for Datasets”—standardized documentation that details exactly where data came from, its original purpose, and its known gaps.

- Synthetic Data Injection: Sometimes, the real world is too biased to be useful. Researchers are now using synthetic data (artificially generated records that mimic the statistical properties of real data) to balance out datasets. If your dataset is 90% English speakers, you don’t just hope for the best; you synthetically generate examples of the underrepresented speakers, including edge cases, so the model cannot get away with learning only the majority pattern.
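One well-known rebalancing technique is SMOTE (Synthetic Minority Over-sampling Technique), which creates new minority-class rows by interpolating between an existing minority row and one of its nearest minority neighbors. The sketch below is a simplified, from-scratch version of that idea, not a method the article prescribes (the `imbalanced-learn` package ships a full `SMOTE` implementation); the function name `smote_like` and the 2-D feature vectors are hypothetical.

```python
import math
import random


def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic rows by interpolating between a random minority
    row and one of its k nearest minority neighbors (the core idea of SMOTE)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # The k other minority rows closest to `base` (Euclidean distance).
        neighbors = sorted(
            (row for j, row in enumerate(minority) if j != i),
            key=lambda row: math.dist(base, row),
        )[:k]
        other = rng.choice(neighbors)
        u = rng.random()  # how far along the segment from base to other
        synthetic.append(tuple(b + u * (o - b) for b, o in zip(base, other)))
    return synthetic


# Hypothetical 2-D feature vectors: 9 majority rows, 3 minority rows.
majority = [(float(i), 1.0) for i in range(9)]
minority = [(0.0, 5.0), (1.0, 6.0), (2.0, 5.5)]

new_rows = smote_like(minority, n_new=len(majority) - len(minority))
balanced_minority = minority + new_rows
print(len(majority), len(balanced_minority))  # 9 9
```

Because every synthetic row lies between two real minority rows, this kind of oversampling only fills in the region the minority data already covers; it cannot invent behavior the data never showed.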

The Takeaway: You cannot build a fair machine on unfair history. Audit the data before you write the code.

2. XAI: Breaking the Black Box

“Computer says no” is no longer an acceptable answer.

Explainable AI (XAI) is the field dedicated to making the internal logic of a machine understandable to humans. If a doctor uses AI to recommend surgery, they need to know which symptoms triggered that recommendation.

There are two main ways to achieve this:

- Post-Hoc Interpretability (LIME & SHAP): These are tools that run after the AI makes a decision. Imagine an AI classifies a photo as a “Wolf.” A tool like LIME (Local Interpretable Model-agnostic Explanations) will obscure parts of the image to see what changes the decision. If hiding the snowy background makes the AI think it’s a “Dog,” we know the AI wasn’t looking at the animal at all; it was cheating by looking at the snow. SHAP (SHapley Additive exPlanations) works at the level of input features, assigning each one a score for how much it pushed a particular prediction up or down.

- Glass-Box Models: For high-stakes fields like criminal justice or credit scoring, we may need to abandon complex neural networks entirely in favor of inherently interpretable models such as Generalized Additive Models (GAMs). They generate less hype, but because a GAM’s prediction is a sum of one learned function per variable, humans can trace exactly how much each variable contributed.
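SHAP is built on Shapley values from cooperative game theory: a feature’s score is its average marginal contribution to the output across all subsets of the other features. The `shap` library approximates these efficiently; the brute-force sketch below computes them exactly for tiny hypothetical models, assuming that a “missing” feature is simply replaced by a fixed baseline value (one of several ways to define absence). It also shows why glass-box models need no such tool: for an additive, GAM-style model, each feature’s score is just its own term.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by brute force over all feature subsets.

    A feature "absent" from a subset is replaced by its baseline value.
    The cost grows as 2**n, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(subset):
        # Model output with features in `subset` taken from x, the rest from baseline.
        return model([x[i] if i in subset else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical black box with an interaction between features 1 and 2.
def black_box(z):
    return 2 * z[0] + z[1] * z[2]


phi = shapley_values(black_box, x=[1, 1, 1], baseline=[0, 0, 0])
print([round(v, 6) for v in phi])  # [2.0, 0.5, 0.5]


# Hypothetical GAM-style model: a sum of one function per feature.
def gam(z):
    return 3 * z[0] + z[1] ** 2 - z[2]


print([round(v, 6) for v in shapley_values(gam, [1, 2, 3], [0, 0, 0])])  # [3.0, 4.0, -3.0]
```

The interaction term in `black_box` is split evenly between features 1 and 2, and the scores always sum to the change in output between the baseline and `x`. For `gam`, each score equals that feature’s own term, which is exactly what a glass-box model lets you read off directly.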

3. Adversarial Robustness: The “Stop Sign” Test

AI is incredibly smart, but it is also incredibly brittle.

In a well-known study, researchers put a few small stickers on a Stop sign. To a human, it was still obviously a Stop sign. To a road-sign classifier of the kind self-driving cars rely on, the stickers were enough to make the algorithm identify it as a “Speed Limit 45” sign.

That is a trust-killer.
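The sticker attack was physical, but the underlying weakness is easy to reproduce digitally. The Fast Gradient Sign Method (FGSM) nudges every input feature by a small amount `eps` in whichever direction most increases the model’s loss. The sketch below applies it to a hypothetical four-feature logistic-regression “stop sign” detector with made-up weights; a real vision model would compute the gradient with automatic differentiation rather than the closed form used here.

```python
import math


def predict_proba(w, b, x):
    """Logistic-regression probability that x is the positive class."""
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method for logistic regression.

    The gradient of the log-loss with respect to x is (p - y) * w, so each
    feature moves by eps in the sign of that gradient.
    """
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


# Hypothetical detector: 1 = "stop sign"; weights and input are made up.
w = [1.5, -2.0, 1.0, 0.5]
b = -0.2
x = [0.6, 0.1, 0.4, 0.3]

x_adv = fgsm(w, b, x, y=1, eps=0.25)
print(round(predict_proba(w, b, x), 2))      # 0.74 -> classified as stop sign
print(round(predict_proba(w, b, x_adv), 2))  # 0.45 -> no longer a stop sign
```

No feature moved by more than 0.25, yet the decision flipped: the attack concentrates its budget on exactly the directions the model is most sensitive to.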

To fix this, we need Adversarial Training: deliberately generating manipulated inputs (adversarial examples) during the training phase and teaching the model to classify them correctly. It goes hand in hand with hiring “Red Teams,” engineers whose sole job is to attack the AI with perturbed images, confusing audio, and logical paradoxes. We have to break the AI in the lab so it doesn’t break in the wild.
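A minimal sketch of the adversarial-training loop, assuming a plain logistic-regression model and single-step FGSM attacks (both are illustrative choices; image models typically use stronger multi-step attacks such as PGD). Each epoch, the current model is attacked, and the attacked copies are added to the training batch with their correct labels. The six training points are hypothetical and deliberately easy; the point is the shape of the loop, not the numbers.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def sign(v):
    return (v > 0) - (v < 0)


def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def fgsm(w, b, x, y, eps):
    """Shift x by eps per feature in the direction that increases log-loss."""
    p = sigmoid(logit(w, b, x))
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps=0.5, lr=0.1, epochs=200):
    """Logistic regression trained on every clean example plus an FGSM copy
    that is regenerated against the current weights each epoch."""
    n_features = len(data[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        batch = list(data) + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in batch:
            err = sigmoid(logit(w, b, x)) - y  # d(log-loss)/d(logit)
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


# Hypothetical, linearly separable 2-D training set.
data = [((2.0, 2.0), 1), ((3.0, 1.0), 1), ((2.0, 3.0), 1),
        ((-2.0, -2.0), 0), ((-3.0, -1.0), 0), ((-2.0, -3.0), 0)]
w, b = adversarial_train(data)


def accuracy(points):
    return sum((logit(w, b, x) > 0) == (y == 1) for x, y in points) / len(points)


attacked = [(fgsm(w, b, x, y, eps=0.5), y) for x, y in data]
print(accuracy(data), accuracy(attacked))  # 1.0 1.0
```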

The Rule: If your AI hasn’t been attacked by its creators, it isn’t ready for the public.

4. Human-in-the-Loop (HITL) Architecture

The final layer of trust isn’t code; it’s protocol.

For the foreseeable future, AI should operate as a co-pilot, not the captain. This is called Human-in-the-Loop architecture.

In this model, the AI does not execute a decision; it provides a recommendation with a confidence score.

- Low Confidence (e.g., 60%): The system flags the item for human review immediately.

- High Confidence (e.g., 99%): The system automates the task, but a human audits a random 5% sample of these decisions to catch drift, where accuracy quietly degrades as real-world data changes.

This creates a safety net. It acknowledges that the machine is a tool for calculation, but the human is the vessel for accountability.
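The routing rule described above can be sketched in a few lines. The 0.9 threshold, the 5% audit rate, the function name `route`, and the `(item_id, label, confidence)` record shape are all assumptions for illustration; in practice the threshold would be tuned against the cost of errors in the specific domain.

```python
import random


def route(predictions, threshold=0.9, audit_rate=0.05, seed=0):
    """Send low-confidence predictions to human review, automate the rest,
    and draw a random audit sample from the automated decisions."""
    review, automated = [], []
    for item_id, label, confidence in predictions:
        queue = automated if confidence >= threshold else review
        queue.append((item_id, label, confidence))
    n_audit = max(1, round(audit_rate * len(automated))) if automated else 0
    audit = random.Random(seed).sample(automated, n_audit)
    return review, automated, audit


# Hypothetical model outputs: (item_id, predicted_label, confidence).
preds = ([(i, "approve", 0.99) for i in range(80)]
         + [(i, "deny", 0.60) for i in range(80, 100)])

review, automated, audit = route(preds)
print(len(review), len(automated), len(audit))  # 20 80 4
```

Comparing the model’s label with the auditor’s on each sampled item, period over period, gives a simple drift signal: if agreement starts falling, the threshold or the model needs revisiting.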

The Verdict

Building trustworthy AI is hard work. It requires slowing down. It requires spending money on auditing data rather than just buying more GPUs.

But the alternative is a world where we are governed by algorithms that we cannot question, understand, or correct. That isn’t progress; that’s just efficient chaos. We have to build the brakes before we build the engine.

This is Abhee. Thanks for reading, and I will catch you in the next one!